Issue: vCenter 7 and vSAN – Unable to Extract Requested Data (Trust Anchor Errors)

Issue and Background

After a failed attempt to rename ESXi hostnames and regenerate certificates, the same set of vSAN errors kept recurring, blocking all monitoring and other vSAN interactions via the vSphere Web Client. The available KBs on this topic (KB55092, KB2148196) only offer a glimpse of hope: they show that the issue was once known and could be resolved by a simple update in vCenter v6.x, but no equivalent fix is yet available to early adopters of vCenter 7.

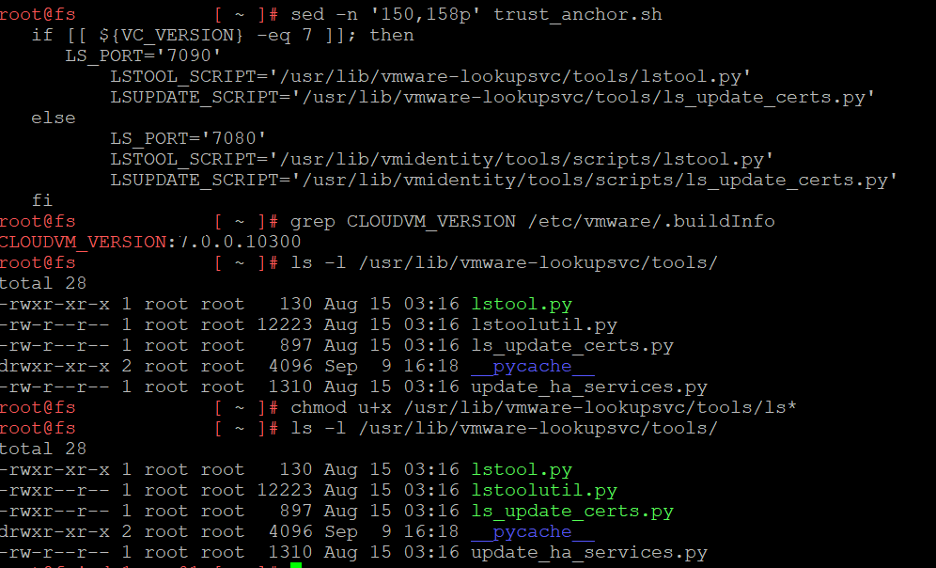

Below are two symptoms of the issue in terms of error messages, as found when navigating the vSphere Web Client on vCenter.

vSAN diagnostics claim that the cluster is healthy (via the command line) and the VMs are working. Unfortunately, the vSAN cluster cannot otherwise be managed, and we were blocked from adding additional capacity disks to the cluster, creating a real issue.

This article will walk through resolving the issue (which ultimately was caused by certificate problems). This walkthrough will require administrative access to the VCSA appliance via SSH.

Disclaimer: Follow these instructions at your own risk; they are provided without warranty. Neither Ferroque Systems nor its affiliates will be held liable for unanticipated impacts in your environment from running these commands. We strongly recommend taking a snapshot, clone, or VM backup of the VCSA prior to executing these commands.

Resolution

For this tutorial, I’ll be using vCenter 7 with an embedded PSC (Platform Services Controller), and, as shown below, none of the vSAN monitors are displaying as expected. We’ll need to check the vSphere Client logs to try to pinpoint the cause of this problem.

Step 1

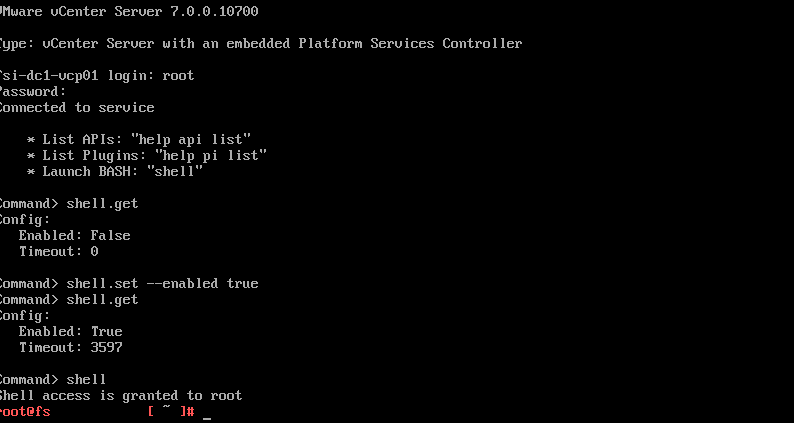

SSH into VCSA via the root account and enter shell mode.

shell.set --enabled true

The status of shell can be viewed with:

shell.get

Confirm in the previously mentioned vSphere Client logs that there is a thumbprint issue with certificates.

vim +/thumbprint /var/log/vmware/vsphere-ui/logs/vsphere_client_virgo.log

This command opens vim and jumps to the first occurrence of “thumbprint” in the vsphere_client_virgo.log file. Press “n” to jump to the next match and “N” to jump to the previous one. To exit vim without saving, press “Esc” and then type “:q!”. Note the errors in the screenshot below as evidence.
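For a quick, non-interactive look at the same information, the search can also be done with grep. The following is a minimal sketch and not part of the original procedure; the function name `show_thumbprint_errors` and the default of 20 lines are illustrative assumptions.

```shell
# Hypothetical helper (not part of VMware's tooling): print the most recent
# log lines that mention "thumbprint", prefixed with their line numbers.
show_thumbprint_errors() {
    local log_file="$1"
    local max_lines="${2:-20}"   # assumed default; adjust as needed

    if [ ! -r "$log_file" ]; then
        echo "Cannot read log file: $log_file" >&2
        return 1
    fi

    # -n adds line numbers, -i ignores case; tail keeps only the newest matches.
    grep -n -i 'thumbprint' "$log_file" | tail -n "$max_lines"
}
```

On the appliance this could be called as `show_thumbprint_errors /var/log/vmware/vsphere-ui/logs/vsphere_client_virgo.log`. Empty output simply means no line in that file mentions a thumbprint.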

Step 2

To remedy those two errors and get vSAN working again, download this script:

https://web.vmware-labs.com/scripts/check-trust-anchors

This script makes use of the built-in VMware Python scripts lstool.py and ls_update_certs.py.

Copy and paste the script from the site into a filename of your choice, then give the file executable permissions via chmod.

chmod u+x check-trust-anchors

./check-trust-anchors -cml

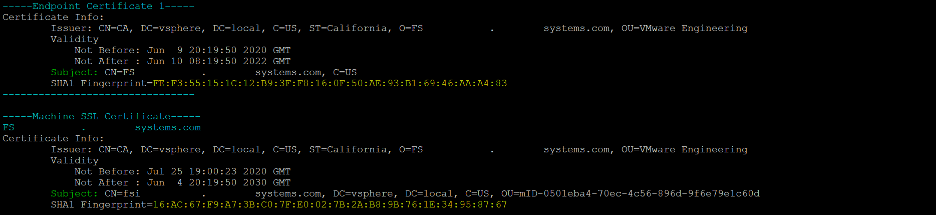

Run the script with options -cml to colorize the output, display information about the machine SSL certificate, and check the current machine SSL certificates. The cause of this issue is that the endpoint certificate fingerprint doesn’t match the machine SSL certificate. To view all the endpoint URIs associated with the mismatched certificate, run the script with the -e switch appended.
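To make the mismatch concrete: the check amounts to comparing two certificate fingerprints, which different tools may print with or without colons and in different letter case. The sketch below shows one way to compare two such strings by hand. `normalize_fp` and `fp_match` are hypothetical helper names and are not functions from the check-trust-anchors script.

```shell
# Hypothetical helpers for comparing two certificate fingerprints by hand.

# Drop any "label=" prefix, remove colons and whitespace, and upper-case the
# hex digits so that differently formatted fingerprints compare equal.
normalize_fp() {
    printf '%s' "${1##*=}" | tr -d ': \t\n' | tr 'a-f' 'A-F'
}

# Succeeds (exit status 0) when both fingerprints are the same after normalizing.
fp_match() {
    [ "$(normalize_fp "$1")" = "$(normalize_fp "$2")" ]
}
```

As a usage example, `fp_match "$machine_fp" "$endpoint_fp" && echo match || echo MISMATCH`, where the two variables hold the fingerprints reported for the machine SSL certificate and for the endpoint. One way to obtain the fingerprint of the certificate currently served on port 443 is `echo | openssl s_client -connect localhost:443 2>/dev/null | openssl x509 -noout -fingerprint -sha1`.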

Step 3

VCSA 7 moved two files that this script depends on into a different directory. Locating these files is important: if the script cannot reference them, it won’t be able to fix the certificate issues, nor will it provide any error message.

If you copied and pasted the script, run this sed command to view the locations of those two files for each version of VCSA.

sed -n '150,158p' check-trust-anchors

If you are unsure which version you’re running, this command will output the exact version.

grep CLOUDVM_VERSION /etc/vmware/.buildinfo
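If only the bare version number is wanted (for example, to paste into a ticket), the value can be extracted from that line. This is a minimal sketch that assumes the file uses a `KEY:value` or `KEY=value` layout, which should be verified on your own appliance; `vcsa_version` is a hypothetical helper name.

```shell
# Hypothetical helper: print only the value of CLOUDVM_VERSION.
# Assumes a "KEY:value" or "KEY=value" line layout in the buildinfo file.
vcsa_version() {
    local buildinfo="${1:-/etc/vmware/.buildinfo}"
    # Strip the key and separator, print the remainder, keep the first match.
    sed -n 's/^CLOUDVM_VERSION[:=][[:space:]]*//p' "$buildinfo" | head -n 1
}
```

Calling `vcsa_version` with no argument reads /etc/vmware/.buildinfo; a different path can be passed as the first argument.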

In my instance, I have VCSA 7.0.0.10300 running, so I’ll need to check the locations listed in the first block of the sed output.

ls -l /usr/lib/vmware-lookupsvc/tools/

Half of the files needed are already executable, so make the rest executable with chmod u+x. This action can be reversed with chmod u-x.

chmod u+x /usr/lib/vmware-lookupsvc/tools/ls*
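To see which of these files lack the execute bit, either before running the chmod or to confirm it afterwards, a small check like the following can help. It is a sketch only; `list_non_executable` is a hypothetical helper name, and the `ls*` pattern simply mirrors the chmod command above.

```shell
# Hypothetical helper: list regular files matching ls* in a directory whose
# owner-execute bit is NOT set.
list_non_executable() {
    local dir="$1"
    # "-perm -100" matches files that have the owner-execute bit set;
    # the leading "!" negates the test, leaving only the non-executable ones.
    find "$dir" -maxdepth 1 -type f -name 'ls*' ! -perm -100 | sort
}
```

For example, `list_non_executable /usr/lib/vmware-lookupsvc/tools` should print nothing once every matching file is executable by its owner.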

Step 4

Now that the prerequisites are completed, run the script again with the --fix or -f option appended to fix the certificate mismatch and regain control of vSAN.

./check-trust-anchors -cml --fix

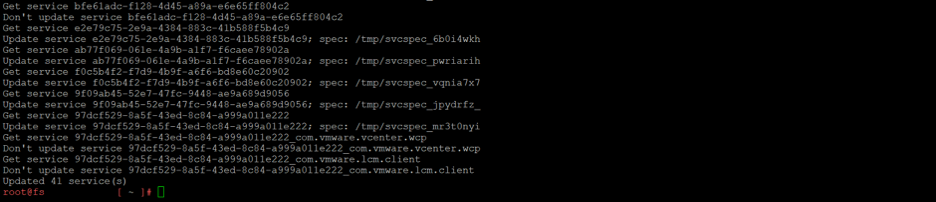

When prompted, enter ‘Y’ to update the trust anchors.

The default SSO admin is fine; press enter to continue.

The fingerprint that needs to be changed is the endpoint fingerprint; copy and paste that in.

Enter the FQDN of the same endpoint; in this case it is FS.systems.com.

The script will finish once the available services are updated.

Re-run the script to confirm that both fingerprints now match, with the -e switch appended to confirm that all endpoint URIs have been updated successfully.

./check-trust-anchors -cmle

Now, return to the web browser and regain control of vSAN.

Conclusion

As you can see, knowledge of certain specific scripts and command-line utilities can be used to resolve this issue, which can otherwise be fairly complex to troubleshoot and resolve. For this reason, it is important for VMware administrators to not only be familiar with using the traditional GUI-based vSphere console, but to also be comfortable using command-line utilities to help troubleshoot and resolve certain issues that can sometimes occur. At Ferroque Systems, along with other solutions, we specialize in designing and implementing virtualization solutions (for example, using Citrix, VMware, and/or Microsoft), and our knowledge and field experience enables us to bring such expertise to bear for the benefit of our customers.


Michael Wieloch

Michael is a multi-disciplinary consultant with expertise in digital workspaces, networking, security, virtualization, DevOps, and Linux. When not engineering systems or building ZFS clusters, he enjoys cruising the streets on his motorcycle.

I have just updated my VCSA to 7.0.1a (build 17005016) and immediately lost access to the vSAN portions of the HTML5 interface – they simply remain blank, except for the Capacity section which displays the “Failed to extract the requested data” error. Perhaps coincidentally it also refuses to display the EULA when trying to install patches via the life cycle manager so it’s not possible to update my hosts either. However when I get to the point of running your script for the first time, it reports matching Machine and Endpoint certificates so I am unsure how to proceed. Do…

Hi Matt, When you look for the vSAN health properties through command line does it function as usual? A command like “esxcli vsan health <cluster_name> list” should report everything as normal if this is a similar issue. The other possibility is through the vCenter management interface (port 5480) that some of the services were either healthy with warnings or some just flat out stopped. If possible, can you regenerate a new machine cert and have the script iterate through each endpoint after making a snapshot to see if there is any difference. I only had issues with the HTML client…

Thank you very much! Awesome tutorial – worked like a charm!

Thanks for the post – worked like a charm

Thanks, incredible work 😉

On step 2 I am only getting a Machine SSL cert and no Endpoint cert. But the other symptoms are exactly what you have here. Any ideas?

VCSA had its certs expire. So, I renewed them. Then I upgraded it to 7.0.3e hoping that might have some effect. vSAN still remains stubbornly not reachable through the GUI. Through the command line it is still fine. Hosts are 7.0.1

I am having the same problem. Did you ever find the endpoint cert?

Worked perfectly for: 7.0.3.00700

I was seeing this issue trying to view Skyline health for vCenter itself and the connected ESXi hosts. Had the issue for a while now after an update a few versions back, finally decided to address it.

Thank you for the fix!

This was an amazing walkthrough and a lifesaver for resolving our vSAN and QuickStart access issue. After replacing the self-signed certificate with one of our own CA certs, this problem crept in. Unfortunately, we had a lot of other issues happening at the same time so we could never pin down what started this. Thanks to vCenter’s cryptic error messages, we just assumed it was related to a failing vSAN cluster (hardware) – no idea it was certificate thumbprint related. Thank you so much!

Worked perfect for our 7.0.1 build with 2 Cert endpoints mismatched. Thanks!

Awesome ! Thanks !!